The latest AI craze - why does ChatGPT have cyber-security professionals concerned?

31 Jan 2023, by Slade Baylis

The rise of artificial intelligence has long been the premise of science fiction novels, TV shows, and movies, though most portrayals place it in the far-flung future. With the recent development of AI image generation tools and the internet storm that followed, many people are beginning to question how far off actual artificial intelligence really is. In line with this, a new AI technology has become the latest internet craze, and it’s potentially even more impactful.

When it comes to automation allowing tasks to be performed more economically, and in turn making certain jobs redundant, one often pictures a factory floor with machines being rolled out to become part of an ever-more optimised production line. In this vision of automation, it’s blue collar jobs that are seen as replaceable, with white collar jobs seen as untouchable, roles that only humans would ever be able to fulfil. However, with the rise of language model AIs like ChatGPT, this assumption is quickly being challenged.

What is ChatGPT? A crash-course on chatbots and conversational AI

When it comes to interacting with computers via conversation, much like you would interact with other people, one of the earliest examples is the text-adventure game. The first of its kind was Colossal Cave Adventure (also referred to as just “Adventure”). It placed players within a gigantic cave network, requiring them to interact with their surroundings through nothing but the written word. Players needed to describe where they wanted to go, which items they wanted to use, and how they wanted to use them in order to navigate the caves and solve the challenges they came across.

Since then, interacting with programs through conversation has come a long way, and is no longer confined to fictional worlds within computer games. On this front, one of the more interesting developments was the creation of “chatbots”: computer programs that use artificial intelligence and natural language processing to respond to the queries people make of them. Users can ask questions and interact with the software via text, and the software attempts to respond in the same way an actual person would.

In the past, most implementations of this were very simplistic, not necessarily in their architecture or design, but rather in their responses. For example, one of the most commonly used chatbots was CleverBot, where users were (and still are) able to ask questions via text and have it respond in a human-like way. After only a few minutes of conversation, though, many people who used such systems could tell that something wasn’t quite right: the responses often felt out-of-place, were full of non-sequiturs, and lacked the basic understanding of conversational context that actual humans naturally have (or most people would anyway!). This shouldn’t be too surprising, as even the notice displayed before you are able to use it mentions that the tool is merely “pretending to be human”, “does not understand you”, and “cannot mean anything it ‘says’”.

In the same vein, organisations have also jumped on the bandwagon to leverage these technologies. Back in 2017, Telstra developed and released its own virtual chatbot, dubbed “Codi”, which was designed to answer customers’ simple, frequently asked questions before directing them to chat with an agent if required. Since then, Codi has evolved into a full virtual agent with the ability to help customers pay or download bills, troubleshoot internet issues, and more.

However, in this realm of AIs that attempt to convincingly communicate, ChatGPT is the new kid on the block, and its perceived leap in conversational ability is what has taken the internet by storm. ChatGPT is one of the most, if not the most, advanced chatbot AI implementations to date. Developed by OpenAI, an artificial intelligence research company based in the US, this AI is the latest in a long line of tools they’ve released, such as their text-to-image generator DALL·E 2, which also went viral the world over.

The headlines about ChatGPT are capturing even more attention though, as the implications could be even more severe. As reported by the ABC [2], this new technology opens the door to the possibility of widespread cheating on homework and take-home assignments, with many educators scrambling to rethink the nature of their assignments. Not only that, but when put to the test, researchers who aimed to test the limits of such technology found that the AI tool was able to achieve over 50 percent in one of the most difficult standardised tests around: the US Medical Licensing Exam (USMLE)!

When it comes to providing a quick introduction on this new technology though, we thought we would let it speak for itself. Not only can it respond to questions that are posed to it, but it can also tailor the answer based on the audience it’s intended for!

For example, here is how it responded to the prompt: “Explain what ChatGPT is to a general audience”

Alternatively, here is how it responded to the prompt: “Explain what ChatGPT is to a technical audience”

As you can see, not only is ChatGPT able to communicate with its users in a much more convincing way - when evaluating how “human-like” its responses are – but it’s also able to answer complex questions and carry out many advanced tasks. It’s that more advanced functionality that has some people concerned, specifically how it could potentially impact “knowledge work”.

Disruption to knowledge industries – How ChatGPT and other language model AIs could affect knowledge-based professions

It’s a question that most people have asked themselves at some time or another: will machines and technology take away our jobs? In the past, this was mostly thought to be a question limited to the realm of manual labour, or jobs that involved repetitive, non-creative endeavours. However, in a world that now contains AIs that can generate new and original pieces of art and music, this assumption is being challenged. With ChatGPT specifically, not only does it possess the ability to convincingly communicate, but its ability to answer complex (sometimes industry-specific) questions in largely reliable ways could, at least in principle, disrupt industries previously thought immune to automation.

The term “knowledge workers” was coined by the management consultant Peter Drucker in 1959 for people engaged in non-repetitive problem solving – and it’s these types of professions that appear to be next on the proverbial chopping block. For example, one of the more surprising features of ChatGPT is its ability to generate functional code in a variety of programming languages, including JavaScript, Python, and PHP. That’s correct – users are able to specify the ultimate goal or functionality they are looking to achieve, specify the language they would like the code to be written in, and are able to let it do the heavy lifting!

Not only that, but when supplied with pre-existing code and an explanation of what the code is intended to do, it’s even able to debug existing or potential issues with it. Though limited at the moment, this early demonstration of the capabilities of language model AI shows that even professions that involve highly creative problem-solving, such as programming, could see massive disruption from new technologies on this front.

However, it’s not just programmers that could be affected by this new technology – as mentioned by ChatGPT itself during its initial self-introduction, ChatGPT is able to be “fine-tuned” and trained on specific data sets in order to improve its performance when responding to different types of questions. As just one example, one could imagine it being used within the legal profession, having been trained on data about prior cases in order to quickly provide relevant precedents that could be related to new upcoming cases. With this technology being hot off the press, there are bound to be many more implications for many more industries than we can possibly predict today.

Automated cyber-crime and polymorphic malware – Cyber-security experts concerned about AI and the automatic creation of malicious software

With code generation being one of the earliest examples provided of the capabilities of this new technology, it shouldn’t come as much of a surprise that this has some cyber-security experts worried. As covered in our “Cyber-attacks on Australian businesses up 89%” article, one of the unfortunate recurring trends is the increase in Ransomware-as-a-Service (RaaS), wherein malicious actors create ransomware that is then leased to cyber-criminals to use. With AI now able to generate functional code, one can easily picture a world in which all a criminal need do is ask the AI to create malware that exploits a newly discovered security flaw, and it sets off to do the dirty deed.

As reported by CRN last week [1], researchers from security vendors including CyberArk and Deep Instinct posted technical explainers about their experiments attempting to get ChatGPT to generate malware. Their findings should concern everyone: not only could it be used to generate malicious code (including ransomware), but technically it’s also able to generate “polymorphic malware”.

One of the most common ways anti-virus applications (and most end-point protection applications) detect malware, prevent it from executing, and remove it is through what’s known as “signatures”. These anti-virus applications come with databases containing the signatures of known malware, which are compared against any scanned file to check for malicious contents. There are of course many other techniques used to detect malicious code, though the concept of looking for “known bad code” is fundamental to most anti-virus implementations. This method is reliable because such malicious code is often circulated widely, so detecting it in one location tells you that it should be blocked in others.
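To make the idea of a signature lookup concrete, here is a minimal sketch in Python. It assumes, purely for illustration, that a “signature” is just the SHA-256 hash of a file’s contents; real anti-virus products also use byte patterns and other techniques. The names `SIGNATURE_DB`, `file_signature`, and `is_known_bad` are our own, and the “known bad” sample is a harmless placeholder string.

```python
import hashlib

# Hypothetical signature database: SHA-256 digests of files already known to be bad.
# The sample below is a harmless placeholder, not real malware.
KNOWN_BAD_SAMPLE = b"harmless placeholder standing in for a known-bad file"
SIGNATURE_DB = {hashlib.sha256(KNOWN_BAD_SAMPLE).hexdigest()}


def file_signature(contents: bytes) -> str:
    """Return the SHA-256 digest used here as the file's signature."""
    return hashlib.sha256(contents).hexdigest()


def is_known_bad(contents: bytes) -> bool:
    """Flag a file only if its signature exactly matches a database entry."""
    return file_signature(contents) in SIGNATURE_DB


if __name__ == "__main__":
    print(is_known_bad(KNOWN_BAD_SAMPLE))          # exact copy of the known sample
    print(is_known_bad(b"an unrelated document"))  # contents never seen before
```

The appeal of this approach is that once one copy of a file has been identified, every identical copy elsewhere can be blocked with a cheap lookup.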

“Polymorphic malware”, however, is a type of malware that tries to evade detection by constantly changing its own identifiable features, which can include file and variable names, types of encryption, and so forth. In the past, such polymorphic behaviour had to be specifically built by sophisticated black hat hackers. With AI such as ChatGPT able to create many different variations of code, however, it could be used to easily generate new malicious code each time it’s used.
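The reason such small changes matter to a defender can be shown with two completely harmless snippets. In this sketch we again assume, as a simplification, that a signature is a SHA-256 hash of the file’s contents. The two variants do exactly the same thing and differ only in a variable name, yet a signature recorded for one does not match the other. All names here are illustrative.

```python
import hashlib

# Two harmless snippets that behave identically; only the variable name differs.
VARIANT_A = b"total = 1 + 2\nprint(total)\n"
VARIANT_B = b"result = 1 + 2\nprint(result)\n"


def digest(contents: bytes) -> str:
    """SHA-256 digest, standing in for a simple exact-match signature."""
    return hashlib.sha256(contents).hexdigest()


# A signature recorded after seeing only the first variant.
KNOWN_SIGNATURES = {digest(VARIANT_A)}


def matches_signature(contents: bytes) -> bool:
    """True only when the contents are byte-for-byte identical to a known file."""
    return digest(contents) in KNOWN_SIGNATURES


if __name__ == "__main__":
    print(matches_signature(VARIANT_A))  # the variant the signature was built from
    print(matches_signature(VARIANT_B))  # same behaviour, different bytes
```

Because any change to the bytes produces a different hash, an exact-match signature has to be recorded separately for every variant.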

It’s this level of modularity and adaptability that has cyber-security experts worried about its ability to be highly evasive of security products that rely on signature-based detection. With this being the case, we recommend that all our clients look to use security products that implement some form of EDR (Endpoint Detection and Response) service. Instead of relying on detecting malicious software through signatures, EDR solutions look to recognise and detect threats through real-time monitoring, analysing behaviour rather than code. We’ll cover this in more detail in a future article, but in short: by looking at patterns of behaviour and flagging what isn’t normal, and thus potentially harmful, these tools can act as a bulwark against these sorts of infinitely-variable attacks.
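As a rough illustration of what “analysing behaviour rather than code” can mean, the sketch below flags any process that modifies an unusually large number of files in a short window, regardless of what the program’s code looks like. This is a toy heuristic of our own and not how any particular EDR product works; the `FileEvent` record, the `suspicious_processes` function, and the thresholds are all assumptions made for the example.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class FileEvent:
    process: str      # name of the process that acted (hypothetical event format)
    action: str       # e.g. "read", "write", "rename"
    timestamp: float  # seconds since monitoring started


def suspicious_processes(events, window_seconds=10.0, max_changes=50):
    """Return processes that modify more than max_changes files within any window."""
    changes = defaultdict(list)
    for event in events:
        if event.action in {"write", "rename"}:
            changes[event.process].append(event.timestamp)

    flagged = set()
    for process, times in changes.items():
        times.sort()
        start = 0
        for end, current in enumerate(times):
            # Slide the window start forward until it spans at most window_seconds.
            while current - times[start] > window_seconds:
                start += 1
            if end - start + 1 > max_changes:
                flagged.add(process)
                break
    return flagged


if __name__ == "__main__":
    # An editor saving a file every few seconds versus a tool renaming files in a burst.
    normal = [FileEvent("editor", "write", i * 5.0) for i in range(20)]
    burst = [FileEvent("unknown_tool", "rename", i * 0.1) for i in range(200)]
    print(suspicious_processes(normal + burst))
```

The rule never inspects the program itself, so renaming variables or changing encryption routines makes no difference to whether the behaviour is flagged.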

Summary – A revolutionary tool with implications for cyber-security and job security

As with most technological developments, it’s likely that ChatGPT (and other language model AIs like it) will cause disruptions in many industries, but even with this disruption, people will be quick to adapt. Whilst the technology in its current early stages is causing worry among some, it will likely be those who choose to use this technology to their benefit who find themselves empowered by it instead.

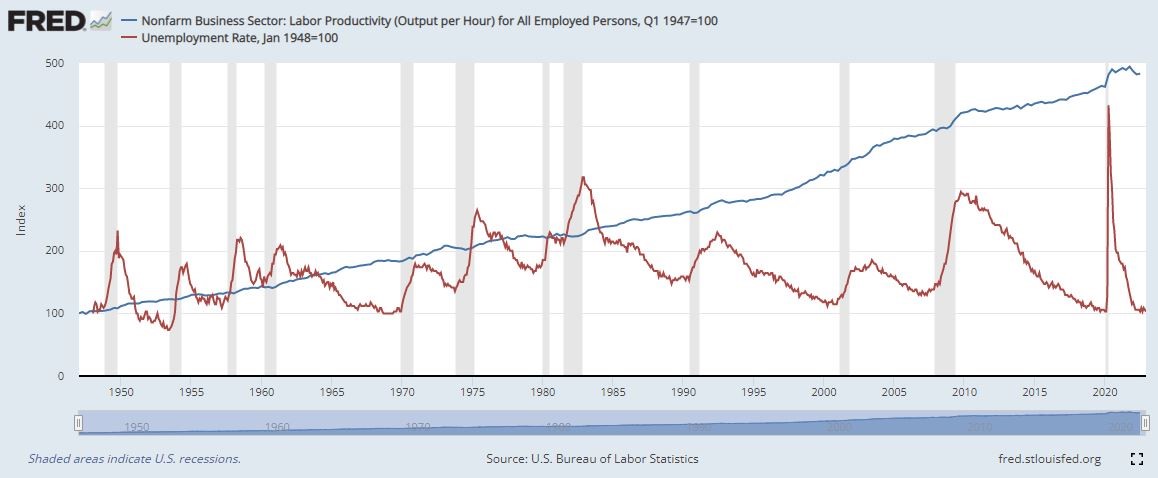

The good news is that data from FRED (Federal Reserve Economic Data), an online database consisting of hundreds of thousands of economic data series from national, international, public and private sources, seems to reinforce this optimistic outlook. As the data shows, all the increases in automation from the 1950s onwards have dramatically increased the productivity of labour, making people around five times as productive as they were, with no long-term upward trend in unemployment [3].

In much the same way that people adapted to the invention of the automobile, the implementation of the assembly line by the Ford Motor Company, or the increasingly popular ride-sharing services like Uber and Lyft, people will adapt here too. Whilst there will inevitably be an effect on the roles that people perform, it’s likely that more productive ones will replace them. The primary hope for all should be that, as certain skills or tasks become de-valued or automated, the transition to alternative jobs or careers is as painless as possible.

Just as a reassurance to our readers, for the foreseeable future I’ll remain the primary article writer here at Micron21 – I’ve yet to be superseded by a ChatGPT-based “Virtual Slade”, at least for now!

Sources

1, ChatGPT malware shows it's time to get 'more serious' about security, <https://www.crn.com.au/news/chatgpt-malware-shows-its-time-to-get-more-serious-about-security-590023>

2, ChatGPT appears to pass medical school exams. Educators are now rethinking assessments, <https://www.abc.net.au/news/science/2023-01-12/chatgpt-generative-ai-program-passes-us-medical-licensing-exams/101840938>

3, US Labor Productivity (Output per Hour) for All Employed Persons vs US Unemployment Rate, <https://fred.stlouisfed.org/graph/?g=ZnDN>